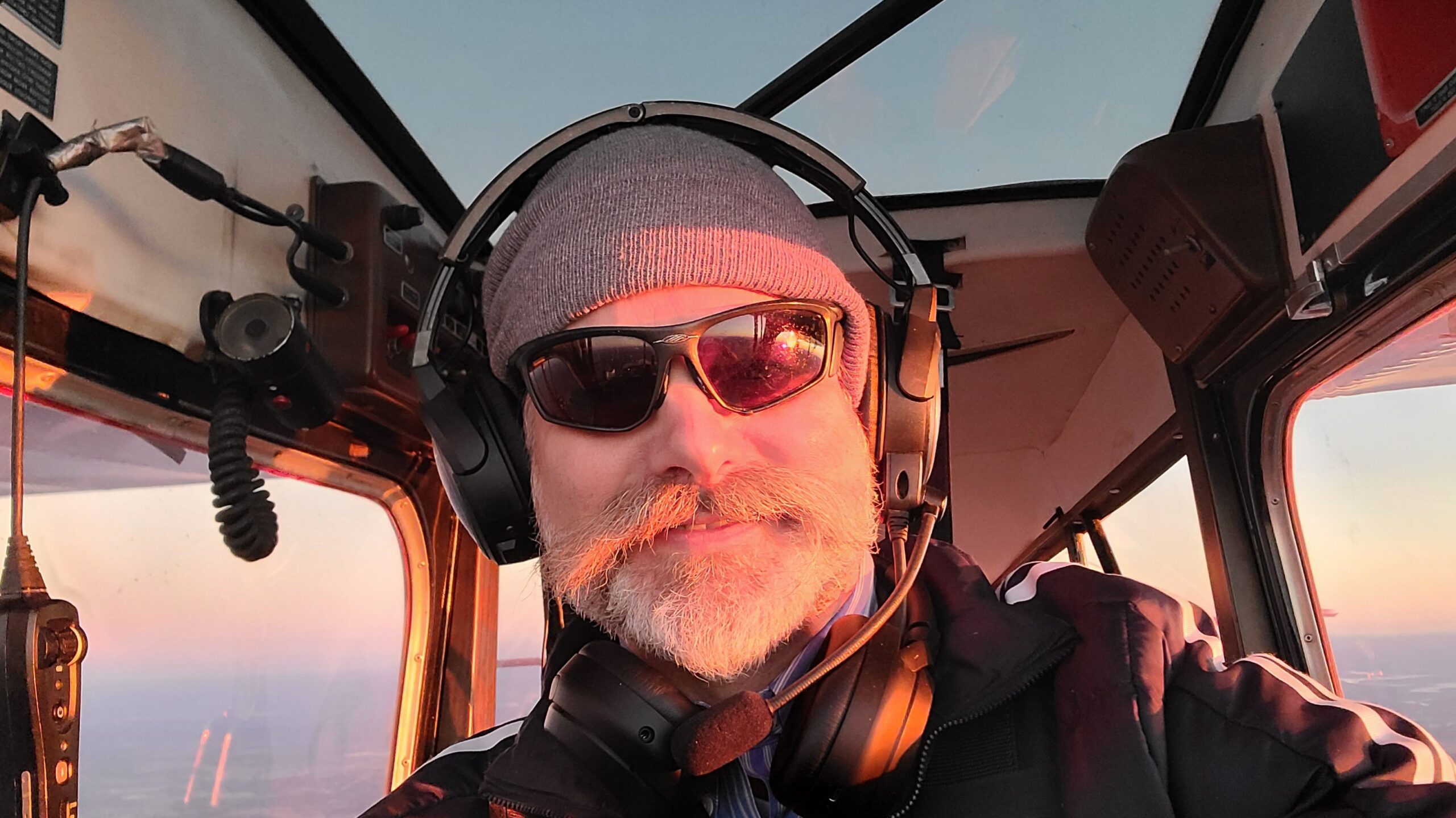

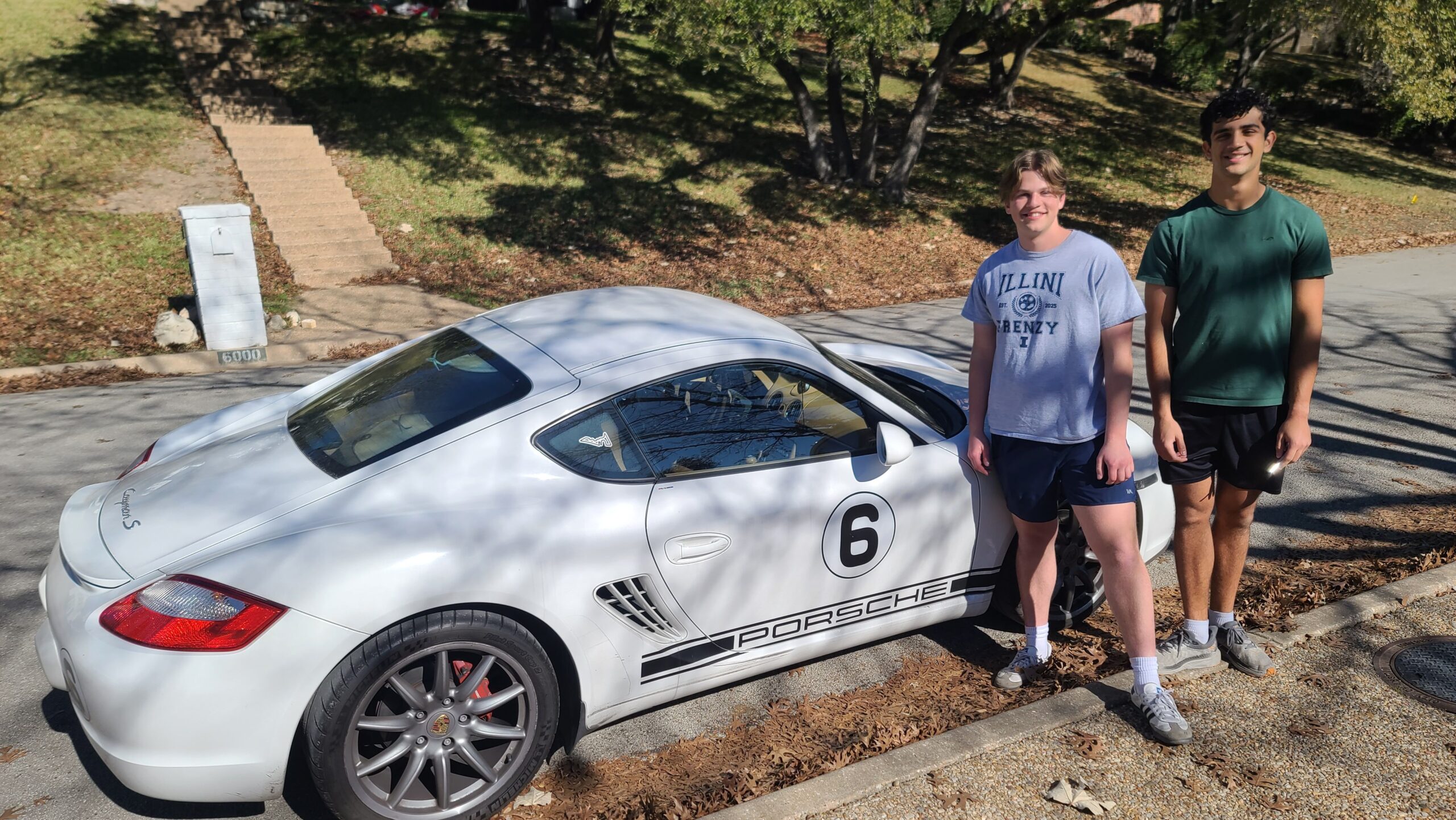

Ro has graduated to driving ‘Cayman 6’ by himself.

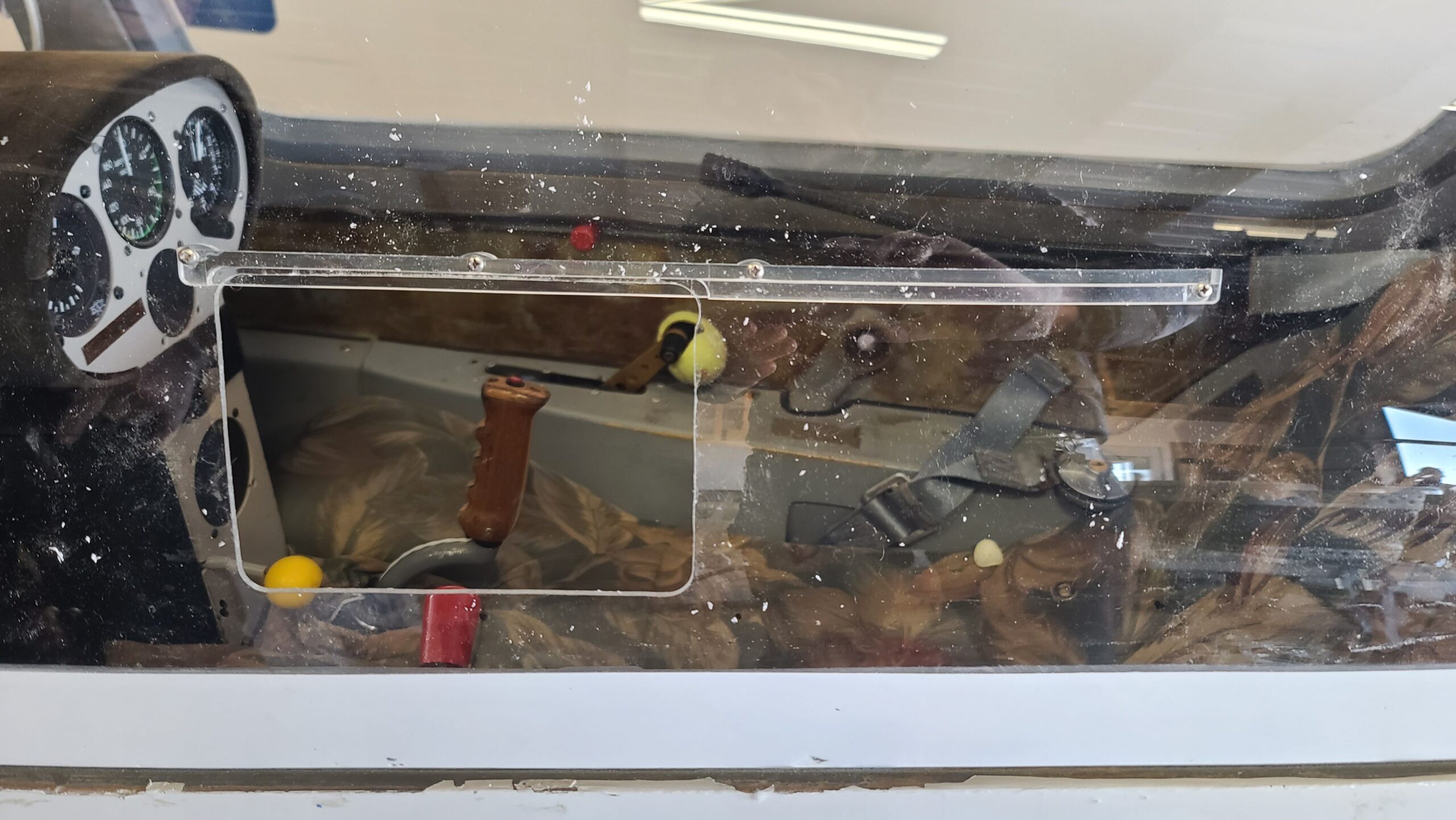

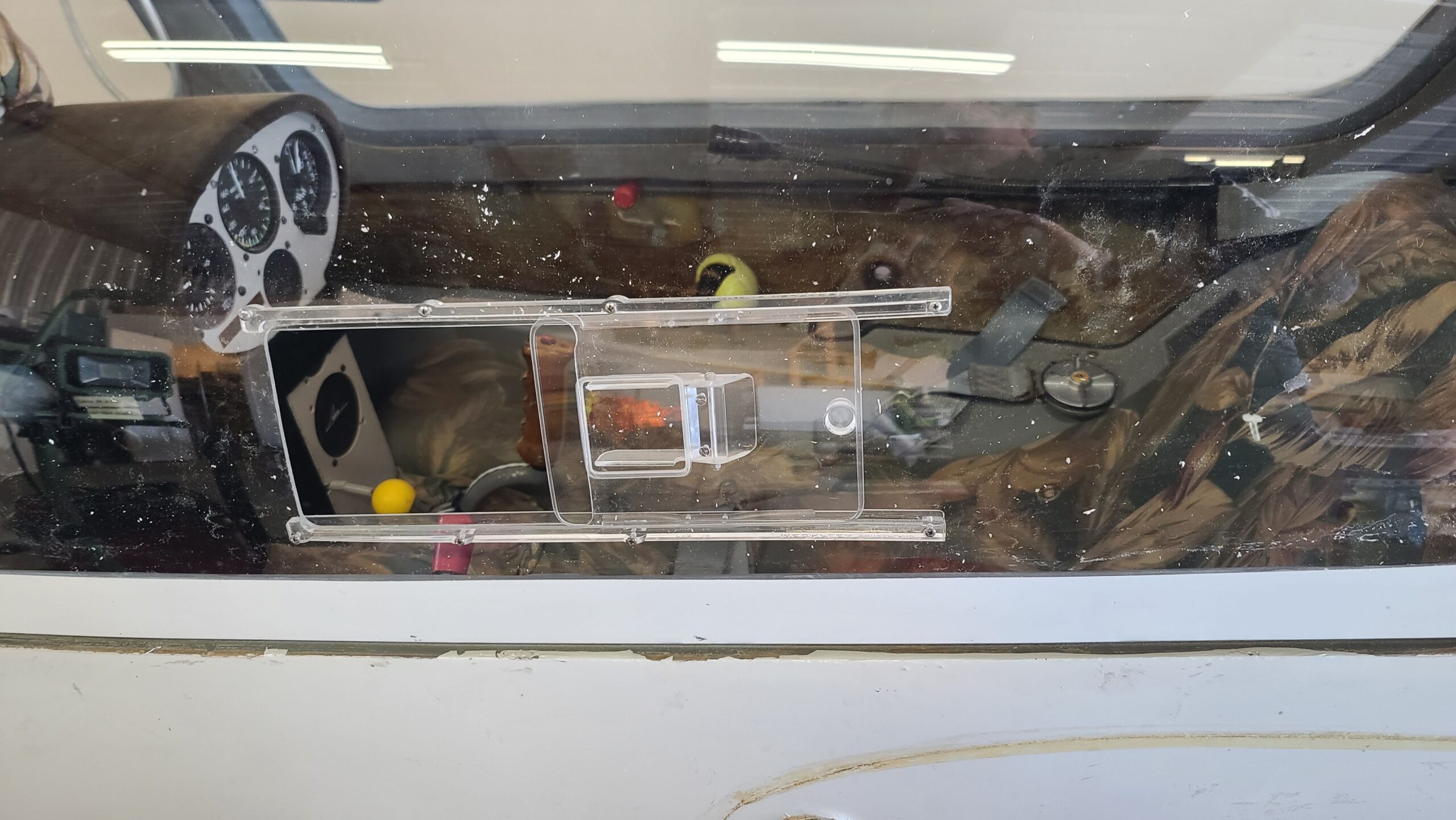

With Ro moving out soon, and our standard trip being a 600 NM nonstop flight to Colorado, I no longer need four seats. So instead of operating the Bonanzas at around $240/hr wet, I can downsize to an experimental 2-seat aircraft.

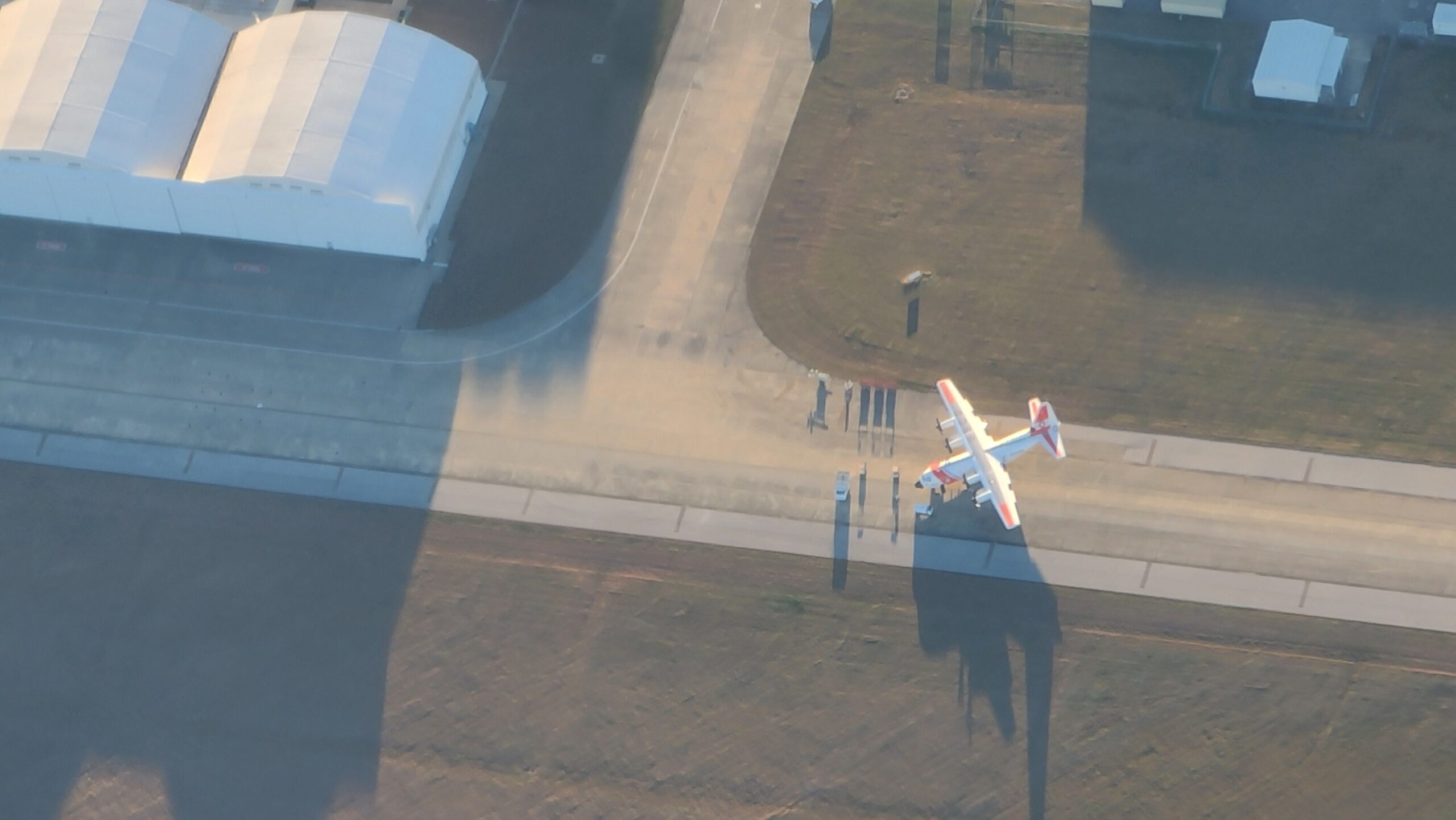

The shortlist of planes I’m looking at: